By Ramon Forster • Jul 18, 2018

In Digital Asset Management and Content Management, many voices suggest that one of the most tedious tasks and biggest pains is relieved thanks to artificial intelligence (AI): tagging digital content. But while there is big potential in AI for auto-tagging content, most eye-catching promises of vendors miss the most important pieces that you need to bring in place first: a solid information architecture.This article intends to provide some advice for using AI technologies for auto-tagging digital content and discusses important limitations and caveats.

Artificial Intelligence: Expectation vs. Reality

If you’re just into managing holiday pictures and stock images then auto-tagging will help you find those images. Here, a big storage space with Google Drive or Microsoft OneDrive might be all you need because these vendors have pretty good artificial intelligence built in – for almost no money.

But if you have many different types of images, videos and other content of your products, components, events and key personnel, then auto-tagging will be okay for some types, but rather useless for others because it will not help you find a particular product XYZ better than before. And you also don’t want to just search “people” and then scroll down a few thousands pictures with people on it just to find your board members all at the bottom.

That said, auto-tagging can really help you, and will do this ever better in the future. But you also have to commit serious efforts in doing your homework first. Auto-tagging isn’t the goal – it merely automates some pieces in a process. And the outcome of such a process is only as good as your information architecture.

Thoroughly defining your information architecture and your content management process first will pay out many times and in many different ways, as I will show later.

How Auto-Tagging Works

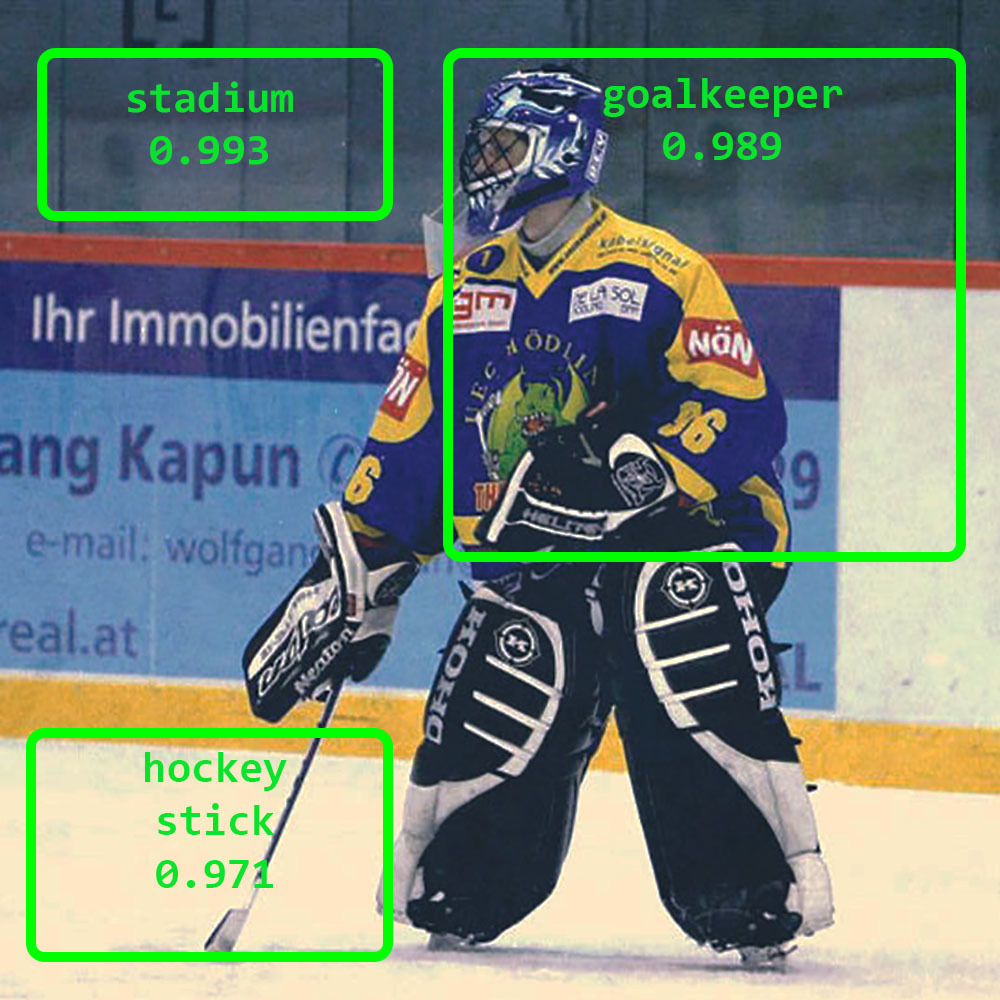

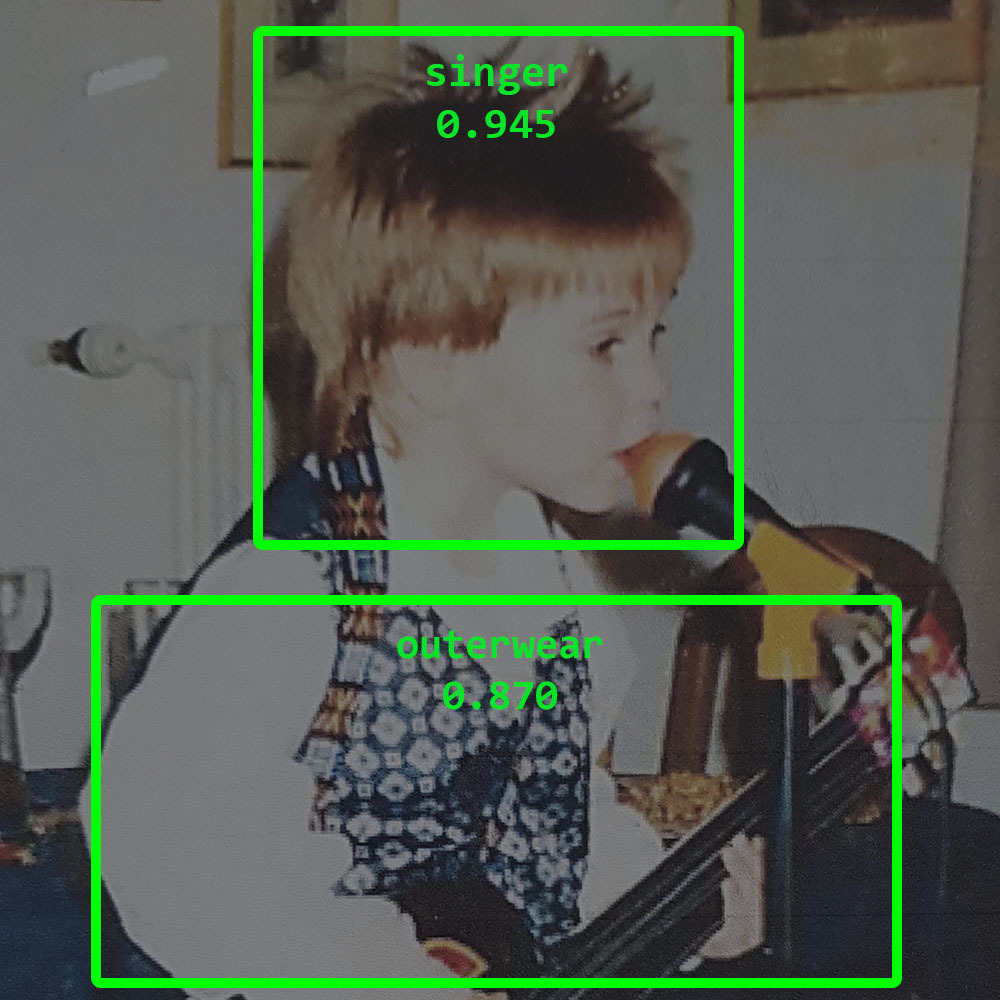

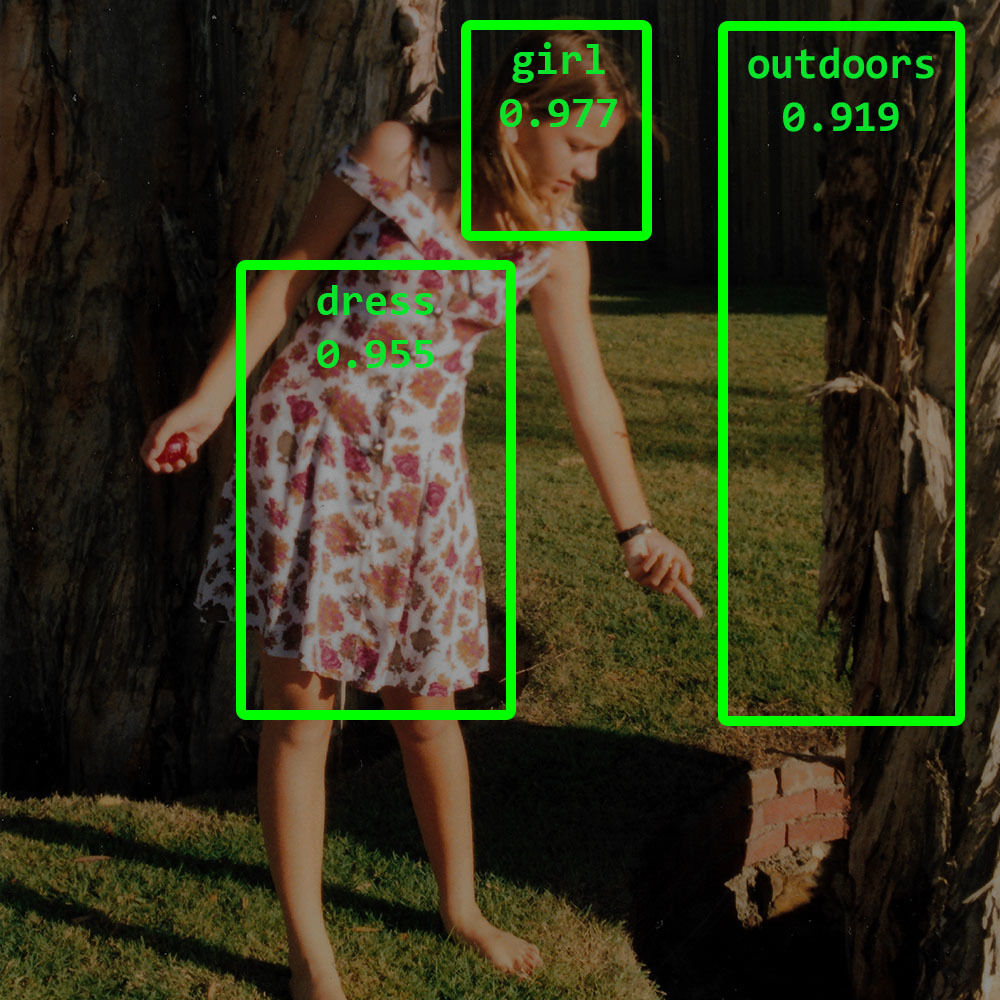

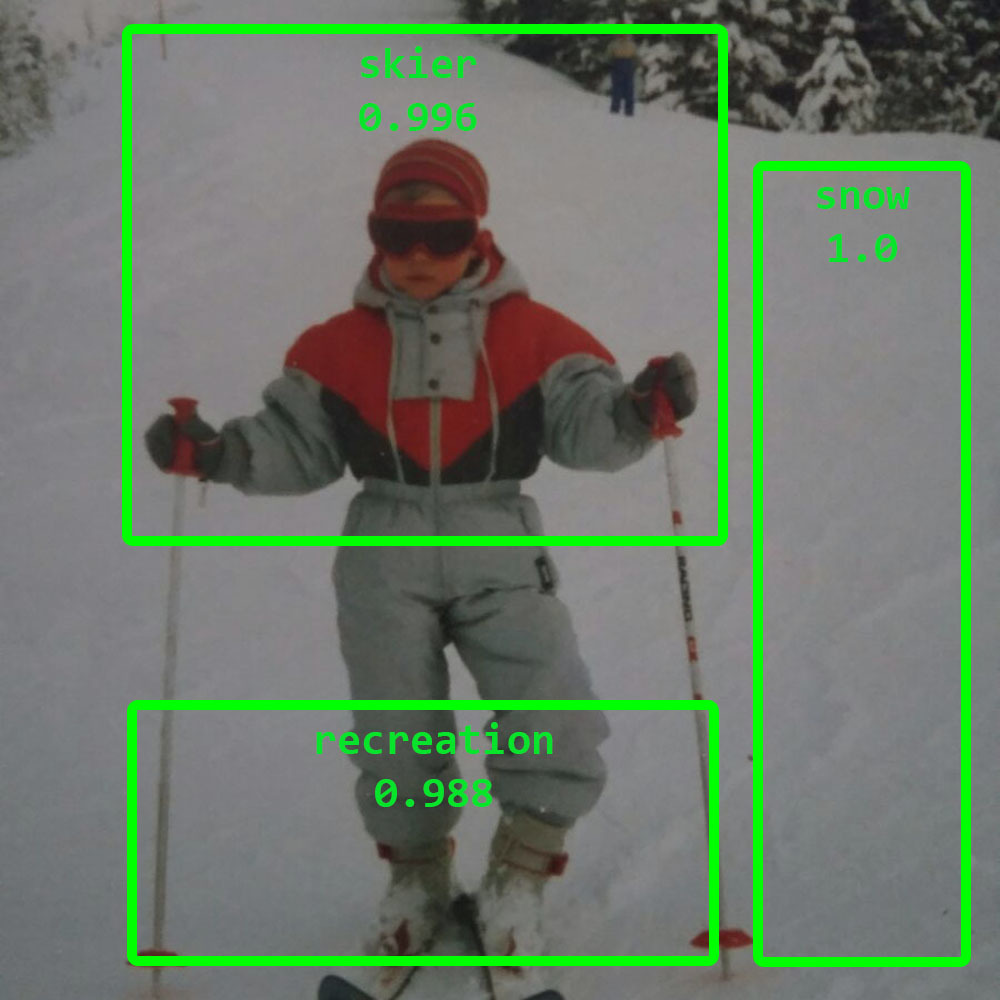

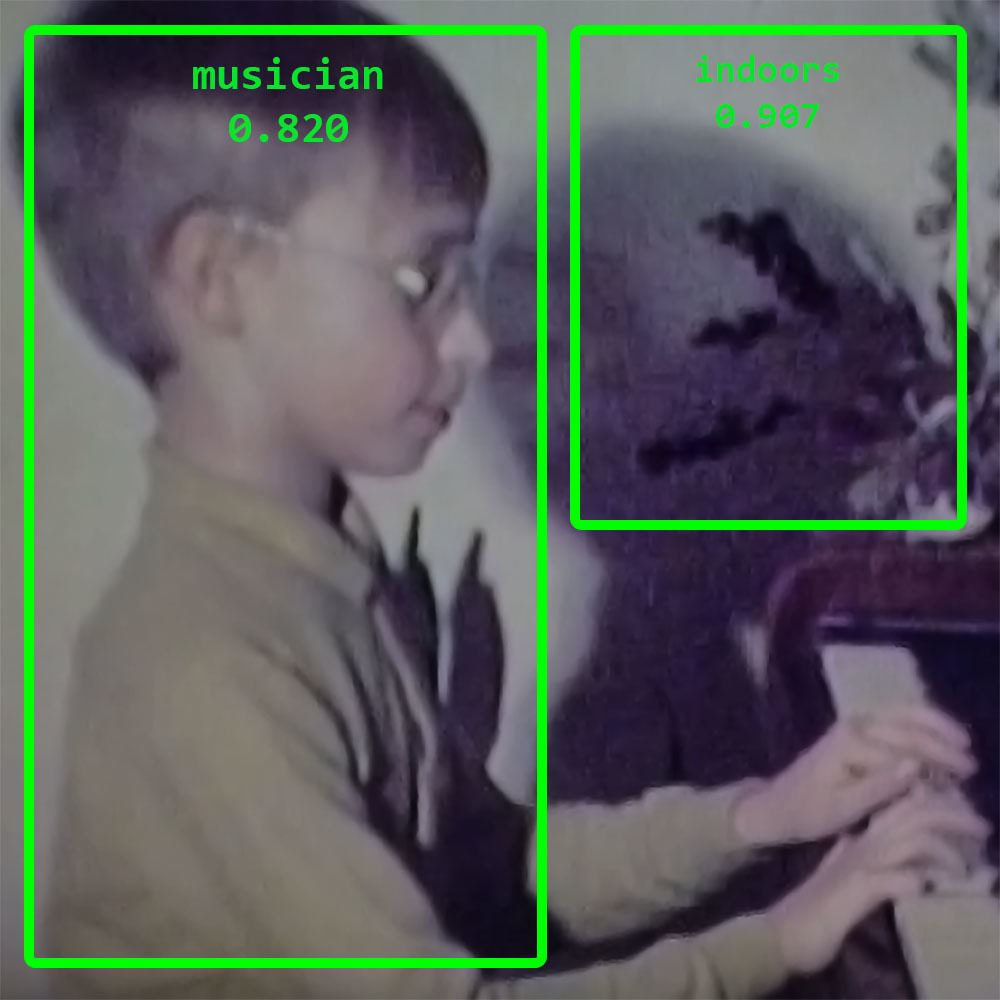

Artificial intelligence usually examines vectors, shapes, colors and other visual marks of an image, and runs these patterns by a reference library for finding out the name in text format of identified objects. For each match, a tag with a confidence score is returned which tells how certain the algorithm is it made the right match.

Knowing that something is a computer with an apple shape on it helps draw the line between Apple, the brand and apple, the fruit.

Advanced algorithms also bring identified objects into relationship with each other and factor this into an improved result set: Knowing that something is a computer with an apple shape on it helps draw the line between Apple, the brand and apple, the fruit.

AI algorithms are good at identifying generic content such as fruits, mountains, people etc. But they are also becoming increasingly better in differentiating on details e.g. by telling that it’s a granny smith apple, Mount Everest, or a famous public person.

You also can train models so that AI providers for image recognition know that this is component X of machine Z. But they’re much weaker on this and most algorithms also struggle in understanding what’s really relevant on the picture because they lack e.g. historical or advanced situational context.

Information Architecture: The Importance of Strong Foundations

Whether you want to use AI now in your digital asset management or content management strategy or wait until these technologies improve further – either way should start with building your information architecture.

An easy way for starting is to ask yourself the following key question:

- What types of content will you manage?

This sounds trivial if you just segment into “images, videos and pdfs”. But try to really define the types of content that will be captured in your images and other file types: product content, event content, employee content, generic stock content etc.

Once you’re clear on this, please answer at least the following questions per each type of content you had previously defined:

- Which metadata will you need to capture at the minimum?

- Which pre-defined taxonomies will you use for each type of metadata?

- How accurately will you need to tag the different metadata?

- Are you permitted to use a 3rd party cloud service for auto-tagging?

- Which other resources (humans, systems) could you use for tagging?

This list of questions is not complete and doesn’t go into all the nitty gritty details but it can help as a starting point. (You also might want to get some help from a digital asset management or content management expert via the DAM Guru Program).

Answering above questions properly will be crucial for helping you in better understanding the implications as further spelled out below.

Matching auto-tags with your taxonomy

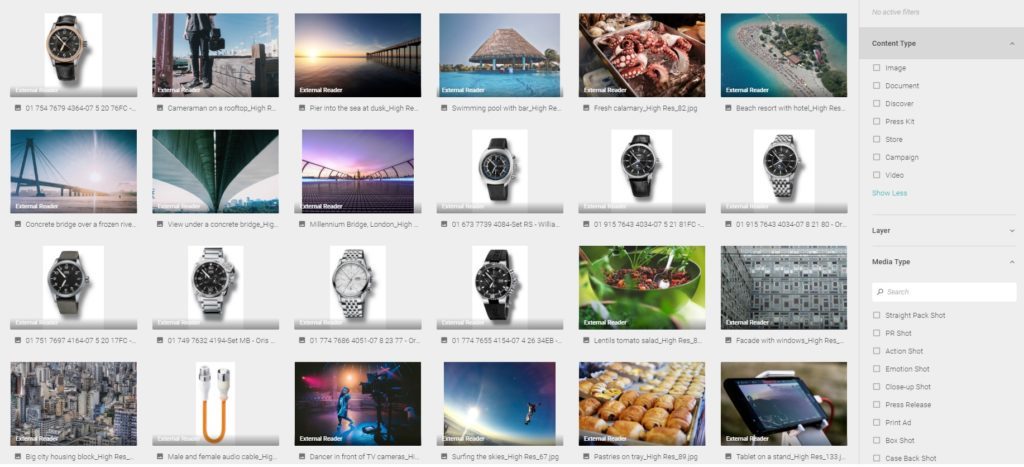

It’s important to understand that auto-tagging services always return simple “tags” to you (text strings) only, and most content systems just store that information somewhere in a free-text field. This helps you when executing a simple generic search query but not necessarily when you want to find specific content based on your specific vocabularies.

If you want to improve findability and guide users during the search process through your large amounts of content then you will end up providing them with metadata filters – just like every e-shop does. These filters (or “facets”) will be segmented e.g. by type of content (product vs. stock), type of media (image vs. video) and conceptual categories (e.g. geography vs. human beings).

If you want to gain such an advantage for guiding the users via filters then you have to match the tags returned by an auto-tagging service with your taxonomy, so that “women” returned from the auto-tagging service matches your broader “people” tag.

Even better though if your content system supports multidimensional metadata and semantic relationships because then your “people” tag can contain all kind of additional information, and e.g. link to the “women” tag which again semantically links to “girl”, “female”, “mother” etc. and all their translated values.

Such multidimensional metadata are especially helpful when working with product information. And if you have a Product Information Management (PIM) or Enterprise Resource Planning (ERP) system in place which masters your product information, you won’t even need “AI voodoo” at all.

Auto-tagging without Artificial Intelligence

A very classical yet often forgotten workflow in digital asset management enables you to “auto-tag” all your product images and videos by simply standardising your file names or XMP metadata that are written into the file headers. Define a naming convention and add your unique product reference number (SKU) to it e.g. with a preceding identifier for this to be a product code, like:

- JetX3467-with-operator_PID12384689234.jpg

- PID12384689234_X3467 movie.mov

- WeldingJetX3467 leaflet-PID12384689234-new-june2018.pdf

In the end, all these little efforts will be traded off by huge cost savings you gain through higher degree of automation (and AI won’t help you much in these product domains, at least for now).

Whether you adhere to a strict guideline or you just ask to always add the product ID with separators (as highlighted in the sample: It doesn’t matter if your content system is capable of extracting it automatically from the filename or a defined XMP field and matching it up with your corresponding product list.

This list then ideally also defines the product brand, category, areas of applications, product life cycle categories, market availabilities etc. which all can come imported from your PIM or ERP as data master too. Now your users can search by vertical or product brand, and they will find all related images, videos and other resources – all based on auto-tagging content with a single product identifier.

If your content system is capable of using multi-dimensional metadata, the addition of a single “product identifier tag” can automatically add-in a wide range of different relevant product information associated with that tag.

Using semantic relationships, you can also easily connect together your freshly added content with other related items of content that you already have stored in your content system.

Granted: this requires a little bit of standardized production workflows in order to ensure product IDs travel with every file that contains specific product content. But unless you manage only a handful of products you will have all information at hand anyway, and this standardization will be nothing compared to other standards in place when actually manufacturing your products.

In the end, all these little efforts will be traded off by huge cost savings you gain through higher degree of automation (and AI won’t help you much in these product domains, at least for now).

Trigger Extended Content Workflows

Adding tags automatically isn’t always the final destination – it can be part of a workflow chain and trigger tasks which can save you a lot of time and money you’re not aware of at first sight.

For example, if your tagged content returns “people” you might want to sort this out and manually check if and for which uses you have received explicit consent from that individual pictured on the image, video or other content. Without such explicit consent and declaration of use to users, you risk high fines under the GDPR and other regulations, as we recently discussed in our blog “Digital Asset Management in the light of the General Data Protection Regulation”.

But the same keyword “people” might also trigger an inspection with regard to safety requirements: Are all people on the construction site wearing helmets? Does the person at the drilling machine wear safety glasses? If not, then you might not be able to use them for distribution without risking your reputation.

Some pictures or videos of events might also contain logos of competitors in the background which auto-tagging providers can identify. You don’t want to spread such images all over, and better screen these out before they hit your content portals or get published on your websites.

Weed out Content Based on Negative Terms

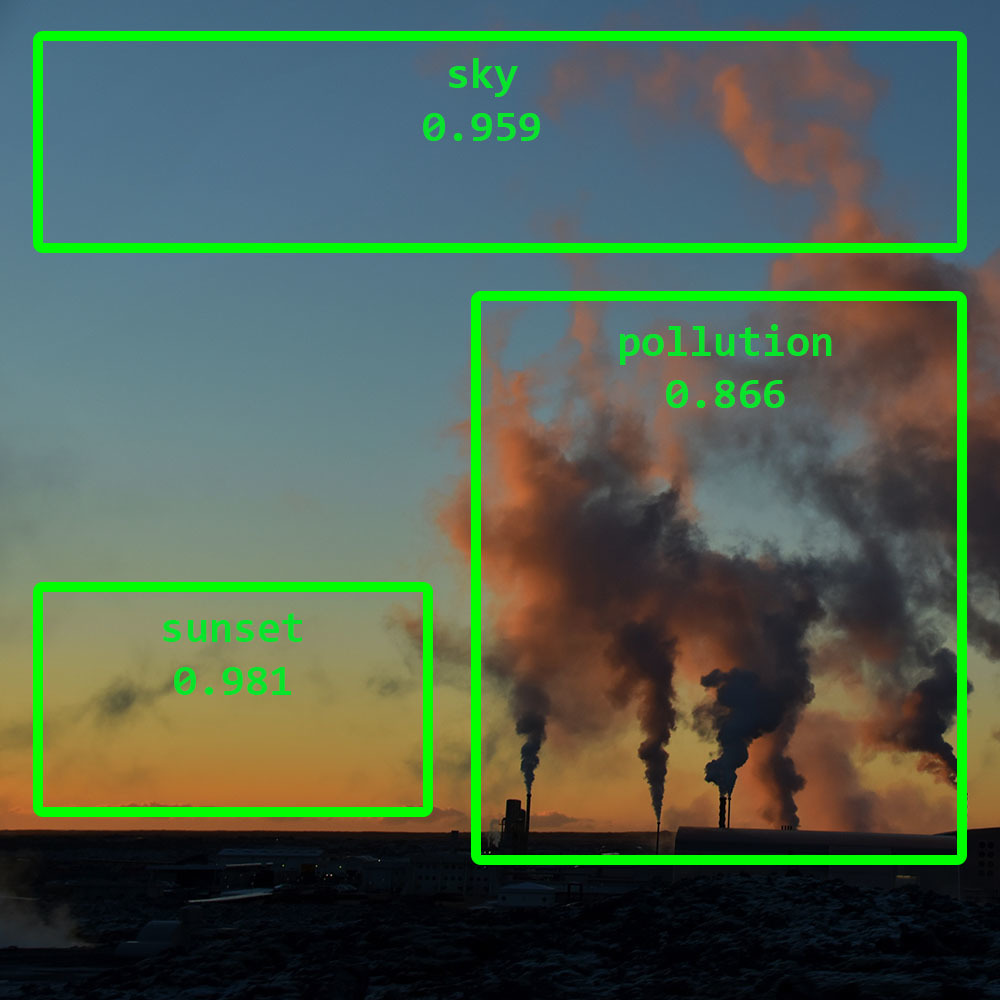

You might also want to compose a list of tags which trigger an alert if found. If for instance you are a petrochemical company and your refinery pictures at sunset are all tagged with “pollution” then you might want to consider if publishing these pictures are a good thing.

In any case, you don’t want to show that term “pollution” just right next to the picture to all users.

This raises the bigger question how your content system stores those auto-tags, and if it can still find them when those tags are hidden to users. And of course: if you can also remove those auto-tags or not.

There might be many more scenarios where you want to trigger a manual review of the content by a particular subject matter expert based on “negative terms”.

Take it out for a Test Drive: Trialling an Auto-Tagging Service

Once you have worked on your information architecture, you might want to take a representative group of images or videos and trial a tagging service such as Clarifai, Google Vision API, Amazon Rekognition, Imagga or Azure Cognitive Services. Run a few batches within their free tiers. This will help you better understand what is basically possible, and it will also have you start thinking of tagging that triggers further processing.

No Human Review? Always Flag it as Auto-Tagged

Based on your your defined information architecture, some types of content (e.g. stock images) are just fine to be auto-tagged because a) that’s much better than not finding the pictures because they’re not tagged, b) the risk is small that something can go really bad, and c) cost savings are huge when not touching them all manually.

Whenever you only auto-tag content without human review: declare it transparently to be “auto-tagged”. Otherwise users might get confused why something is called what it clearly is not (or should not be called), and you’ll lose credibility for your curated content collections.

Looking to the Future: Plan Ahead for Change Management

If you are looking into using Artificial Intelligence for tagging content with metadata, please do it with a long-term perspective.

AI technology advances fast and what shows up with a low confidence score today might be properly identified in the future.

AI technology advances fast and what shows up with a low confidence score today might be properly identified in the future. Hence you should build your information architecture in such way that you can revisit tags in a year from now by sending all or select contents to the tagging service again (which requires you to store at least when the last auto-tagging was done and which of the tagging services was used).

Keep change management in mind for such differing results over time.

Train Your Machine (Machine Learning, ML)

One of the biggest sources of future improvements in auto-tagging is what the machines learned from human users: Most tagging service providers enable customers to train the tagging models by sending the service content and refining the returned tags manually, or adding those which are appropriate. By analyzing the content and the tags you added or removed, the services can use their Machine Learning (ML) algorithms to improve future results.

The better such “feedback” is integrated into your content system, the more effortlessly you will train the tagging service and the more accurately it will tag your specific content (note that you can also get very weird results through ML, and it’s far from from ideal).

Always Read the Small Print

Be cautious when using auto-tagging service providers or when training them because their small printed legal terms can be an issue. You might grant them the right to reuse some of your content and you might not want to expose critical IP like new product blueprints, investor information etc.

Again: With a proper information architecture put in place for your digital asset management or content management initiative from the beginning on, you just prevent all these types of content from being sent to the auto-tagging service.

Seeing Double? How to Locate Finding Duplicates

Some auto-tagging services also provide visual pattern matching and grouping technologies as a side-product which can help you find duplicate content. Relying on file name or checksums comparison is often a problem because a jpg and tiff image file can have the same content but other file names and checksums.

Services without such features might still help you find duplicates just via comparing the tags: files auto-tagged with exactly the same tags and confidence scores might be duplicates, or at least very similar which might be helpful when batch editing them manually, or making a selection of the best to include in curated collection.

Auto-Tagging Videos and Documents

Videos are gaining a lot in popularity. As a sequence of images, video is more difficult to tag properly. If videos are of importance to you, ensure that you are using a tagging service which can detect scene changes, and doesn’t just tag still images every fifth second or so. Your content management system might be able to use each scene identified by the auto-tagging service for setting markers along the timeline, which you can use as cue points when users navigate the videos.

Documents seem to be off-scope for many auto-tagging services like Clarifai. But larger players such as Google or Microsoft offer dedicated services and most content management systems nowadays index the full-text of documents for returning relevant search results.

However, if you want these documents not just searched but automatically tagged with your taxonomy, the content system needs to have some intelligence in place so that a twenty page prospectus doesn’t get tagged with everything from your defined taxonomy but rather with only those tags that matter most.

Don’t Fear Artificial Intelligence

Most librarians and taxonomists I haved talk to still condemn auto-tagged content as being “not relevantly and not-accurately” tagged, predicting that AI will “never replace a good human taxonomist”.

The fact is: the amount of content is rising to levels that can no longer be managed by human beings to the highest degree of sophistication.

While I do understand the motivation for such statements, the fact is: the amount of content is rising to levels that can no longer be managed by human beings to the highest degree of sophistication.

Not every type of content needs the full blown set of metadata assigned and accuracy in tagging is not always of the utmost importance – especially given the alternative which is content not being tagged in time or not at all.

Plus, artificial-intelligence is rapidly improving: Just think think of self-driving cars or drive assistants, security surveillance etc. which all use visual image recognition as part of a larger solution. These areas of applied AI is driving innovation much more rapidly than the content management industry.

The All-Important Human Touch

Many taxonomists point of view also misses the huge opportunity in tagging automation helping subject matter experts to focus again on things they are better at than machines, such as:

- Providing the right context and specific details which auto-tagging services have a hard time detecting (historical or corporate context, importance, implicit messages, moods and emotions).

- Reviewing select auto-tagged content for accuracy, removing what’s wrong and adding what’s lacking (which is many times faster than tagging from scratch).

- Training auto-tagging models for more accurately tagging specific content according to corporate vocabularies and needs of the organization.

- Reviewing and approving tags suggested by users or auto-tagging services, curating and maintaining multi-dimensional metadata and defining semantic relationships.

- Improving the fundament which it all should start with: your information architecture that meets your organization’s specific needs that change faster than ever over time.

If deployed well, auto-tagging services used in content systems can offer many benefits today, and even more in the very near future. It’s up to us humans to deploy these services in a way that makes sense, and to focus again on what we can still do best for a long period of time: solving complex and non-repetitive problems with plenty of context.